DeepSynth is a general-purpose tool for automatically synthesizing programs from examples. It combines machine learning predictions with efficient enumeration techniques.

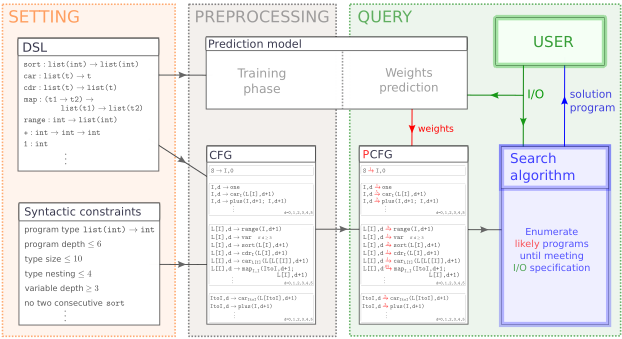

The figure above shows the pipeline, which has four steps.

- The first step is to define a domain-specific language (DSL): a programming language designed for a particular class of tasks.

- The second step is a compilation step: a context-free grammar (CFG) describing the set of all programs is compiled from the DSL and a number of syntactic constraints. The grammar is used to enumerate programs efficiently. However, the number of programs grows extremely fast with program size, which makes program synthesis computationally very hard. We believe that the path to scalability is to combine machine learning predictions with efficient enumeration techniques.

- The third step is to obtain predictions from the examples: a prediction model assigns a probability to each rule of the grammar, yielding a probabilistic context-free grammar (PCFG).

- The fourth and final step is the search: enumerating programs from the PCFG. We introduced distribution-based search as a theoretical framework for analysing search algorithms, and constructed two new algorithms: HeapSearch and SQRT Sampling.
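The four steps above can be illustrated with a minimal, self-contained sketch. It does not use DeepSynth's API: the toy grammar, its rule probabilities, and the names `PCFG`, `enumerate_programs`, `evaluate` and `synthesize` are all hypothetical, and the probabilities are hard-coded rather than produced by a prediction model. The search is a plain best-first enumeration over partial derivations, used here as a simple stand-in for (not an implementation of) HeapSearch.

```python
import heapq
from itertools import count

# Hypothetical toy PCFG over integer expressions. The single non-terminal
# "int" maps to rules (probability, primitive, argument non-terminals).
# In the real pipeline these probabilities would come from a prediction model.
PCFG = {
    "int": [
        (0.4, "x", []),
        (0.3, "1", []),
        (0.2, "add", ["int", "int"]),
        (0.1, "mul", ["int", "int"]),
    ],
}


def enumerate_programs(pcfg, start):
    """Yield (probability, program) pairs in non-increasing probability order.

    Programs are tuples of tokens in prefix notation. Each heap entry is a
    partial leftmost derivation; expanding it can only lower its probability,
    so the first complete derivation popped is a most likely remaining one.
    """
    tie = count()  # unique tiebreaker so tuples are never compared further
    heap = [(-1.0, next(tie), (), (start,))]
    while heap:
        neg_p, _, done, pending = heapq.heappop(heap)
        if not pending:  # no non-terminal left: the program is complete
            yield -neg_p, done
            continue
        nonterminal, rest = pending[0], pending[1:]
        for p, primitive, args in pcfg[nonterminal]:
            entry = (neg_p * p, next(tie), done + (primitive,), tuple(args) + rest)
            heapq.heappush(heap, entry)


def evaluate(tokens, x):
    """Evaluate a prefix-notation program on input x.

    Returns (value, remaining tokens).
    """
    head, rest = tokens[0], tokens[1:]
    if head == "x":
        return x, rest
    if head == "1":
        return 1, rest
    left, rest = evaluate(rest, x)
    right, rest = evaluate(rest, x)
    return (left + right if head == "add" else left * right), rest


def synthesize(pcfg, start, examples, budget=10_000):
    """Return the first enumerated program consistent with all examples."""
    for n, (_, program) in enumerate(enumerate_programs(pcfg, start)):
        if n >= budget:
            return None
        if all(evaluate(program, x)[0] == y for x, y in examples):
            return program
    return None


if __name__ == "__main__":
    # Input/output examples for the target function x -> x + 1.
    print(synthesize(PCFG, "int", [(2, 3), (5, 6)]))
```

Because likely programs are tried first, better rule probabilities mean fewer candidates are evaluated before a consistent program is found, which is the motivation for combining predictions with enumeration.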

DeepSynth was written in Python by Nathanaël Fijalkow, Théo Matricon, Guillaume Lagarde, and Kevin Ellis.

Documentation

See the GitHub README for documentation and introductory examples.

Contact

For questions, suggestions and comments, you may contact Nathanaël and Théo. Contributions are most welcome!

Citing

Cite DeepSynth in academic publications as:

- Nathanaël Fijalkow, Guillaume Lagarde, Théo Matricon, Kevin Ellis, Pierre Ohlmann, Akarsh Potta. Scaling Neural Program Synthesis with Distribution-based Search. AAAI 22: International Conference on Artificial Intelligence. Invited for Oral Presentation.

preprint